We are launching a series of articles by Gian Marco Solas, EU-qualified lawyer and Leading Expert at the BRICS Competition Law and Policy Centre, on the intersection of competition law, AI and sustainable development. "This is a comment about the European Union AI policy within the sustainability realm, the renewed idea of a perpetual motion machine or the best of all possible worlds and an experimental proposal for peace," states the author. The first part of the series focuses on the most relevant provisions of the EU AI Act.

This series of short articles analyses the EU approach to Artificial Intelligence (‘AI’) within the sustainability realm, proposing a “new” mathematical method and model for policy making in AI and more generally. It does so first by briefly describing AI and reporting on some of the most relevant provisions of the EU AI Act. It then proposes some theoretical and mathematical arguments for the potential improvement of the discourse on, and usage of, AI and other new technologies in the legal and economic context, namely by recalling the complex science and universal ethics approach to policy making. It concludes with an experimental proposal for peace, namely a “cooperative competitive game” to systematically turn ecosystems’ natural entropy into valuable assets and new life on Earth through AI and blockchain powered smart contracts and legal claims.

Part I - “Law”

The European Union AI policy within the sustainability realm

The enactment of the Artificial Intelligence (‘AI’) Act by the European Union, along with a series of accompanying policy measures, marks the beginning of the regulatory path of this fascinating technology worldwide. Roughly two and a half years after it was introduced, and following intense lobbying and political efforts, the EU institutions have agreed on the Proposal for a Regulation “AI Act”, which will likely enter into force around mid-2024, with some obligations taking more time to be implemented thereafter. The AI Act and all accompanying studies and initiatives represent a considerable body of work produced by the EU institutions in a relatively short time frame. A sense of urgency seems to have motivated the entire legislative path as well as the overall approach to shaping the AI Act. Prompted by the potential risks of this ground-breaking and fast-developing, or even self-developing, technology, the EU institutions in fact proposed a “risk-based”, as well as “human centered” and “ethical”, approach. Before delving into the meaning of this approach and the provisions of the EU AI Act, a brief general introduction to AI technologies is in order.

1. Academic and technological definitions of AI

As reality reveals new applications of AI technologies by the day, it is useful to provide some general definitions from the academic and technology development context. The term AI was coined by emeritus Stanford Professor John McCarthy in 1955, as “the science and engineering of making intelligent machines”. The IBM website describes AI as “technology that enables computers and machines to simulate human intelligence and problem-solving capabilities”. Google defines it as “a set of technologies that enable computers to perform a variety of advanced functions, including the ability to see, understand and translate spoken and written language, analyze data, make recommendations, and more”. For these reasons, AI is often associated with a generative function, to the extent that it is able not only to read and understand existing data, but also to produce outputs that are ideally intelligent or, in any case, improved.

2. The EU AI Act and sustainability considerations

Article 3 point 1 (a) of the AI Act states that “‘artificial intelligence system’ (AI system) means software that is developed with one or more of the techniques and approaches listed in Annex I and can, for a given set of human-defined objectives, generate outputs such as content, predictions, recommendations, or decisions influencing the environments they interact with;…”. It then specifies in Annex I the AI techniques and approaches as: “(a) Machine learning approaches, including supervised, unsupervised and reinforcement learning, using a wide variety of methods including deep learning; (b) Logic- and knowledge-based approaches, including knowledge representation, inductive (logic) programming, knowledge bases, inference and deductive engines, (symbolic) reasoning and expert systems; (c) Statistical approaches, Bayesian estimation, search and optimization methods”.

The EU institutions further specify in the Impact Assessment accompanying the AI Act (“Impact Assessment”) that “AI systems are typically software-based, but often also embedded in hardware-software systems. Traditionally AI systems have focused on ‘rule-based algorithms’ able to perform complex tasks by automatically executing rules encoded by their programmers. However, recent developments of AI technologies have increasingly been on so called ‘learning algorithms’. In order to successfully ‘learn’, many machine learning systems require substantial computational power and availability of large datasets (‘big data’). This is why, among other reasons, despite the development of ‘machine learning’ (ML), AI scientists continue to combine traditional rule-based algorithms and ‘new’ learning based AI techniques. As a result, the AI systems currently in use often include both rule-based and learning-based algorithms”. The Impact Assessment also reports that “[a] recent Nature article found that AI systems could enable the accomplishment of 134 targets across all the Sustainable Development Goals, including finding solutions to global climate problems, reducing poverty, improving health and the quality and access to education, and making our cities safer and greener”(1). According to a study by McKinsey mentioned in the same Impact Assessment, “the successful uptake of AI technologies also has the potential to accelerate Europe’s economic growth and global competitiveness”(2).

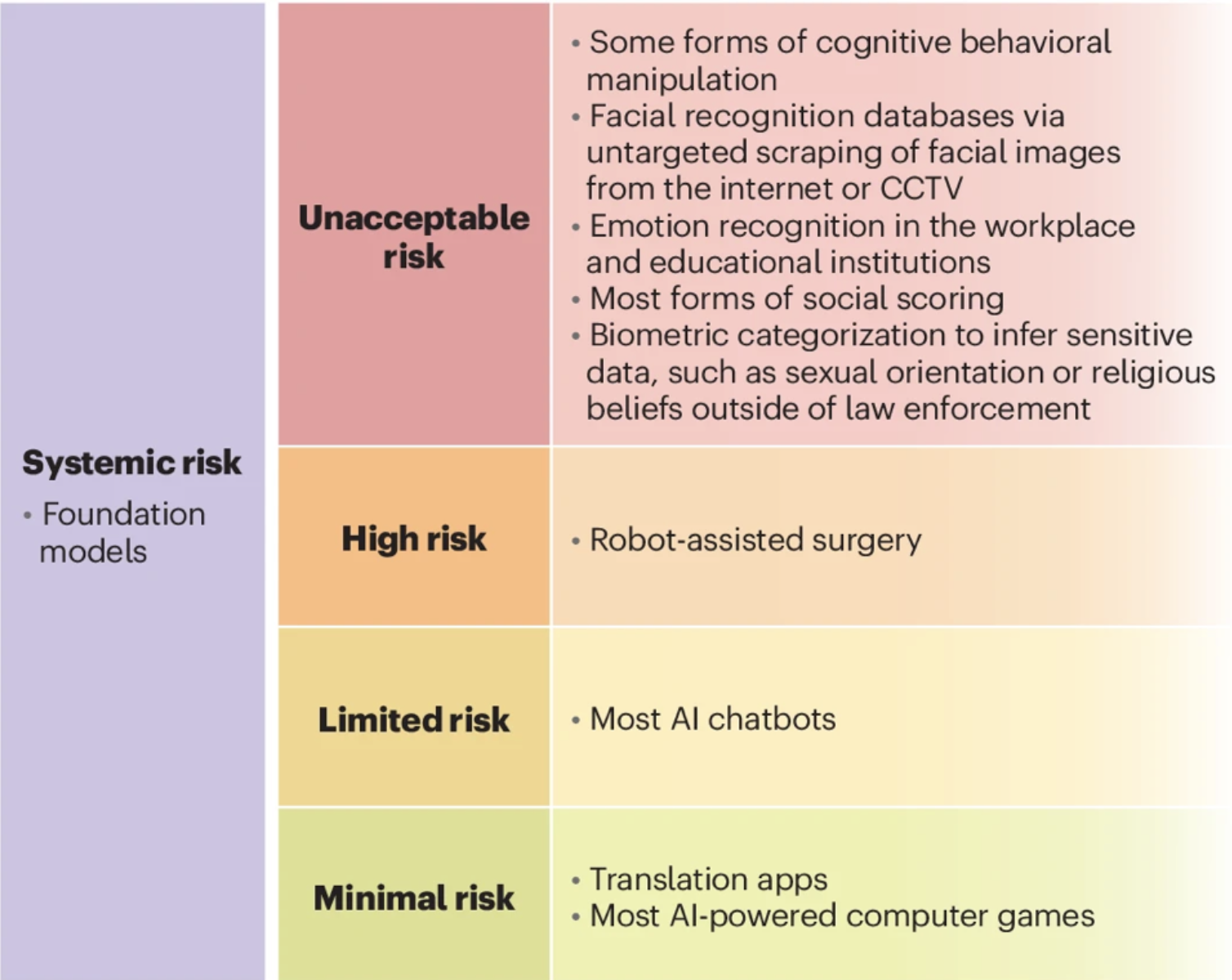

3. Risk-based approach and the “life sciences paradox” in the EU AI Act

Despite this seemingly high socio-economic potential highlighted in the Impact Assessment, and more in line with the complex definition of AI technologies in Annex I, the AI Act focuses on and carefully defines a so-called “risk-based” approach to regulating AI. In other words, it categorises AI on the basis of an ex ante assessment of the risk it allegedly poses, as illustrated in the accompanying figure (3). AI technologies that appear to pose unacceptable risk may not be authorized at all. Technologies in the minimal risk category require very little oversight. Most of the regulatory concern is focused on the categories in between, with higher oversight and regulatory requirements for medical AI such as robot-assisted surgery. This means that any such device, regardless of how it will be used, would have to bear a heavy regulatory burden on top of the one(s) already in place for medical devices and the like.

Interestingly, AI for the military sector seems not to pose such high systemic risk, as it was explicitly excluded from the scope of application of the AI Act. Viewed through the sustainability lens, one may wonder why companies building autonomous killing drones are spared the “friction” of the EU AI Act regulatory burden, while companies building AI-powered robots for surgeries guided remotely by specialists are not. The question recalls the long-debated and well-known “life sciences paradox”, which refers to the idea that scientists do not seem to have found a unanimous definition of life on Earth and its cause, and which clearly calls for additional academic and other efforts. As Poincaré pointed out, it is precisely because according to the laws of nature all things tend toward death “that life is an exception which it is necessary to explain”. As the paradox appears to influence high-level policy making as well, resolving it is a categorical imperative, one that does not seem addressable without including human laws and the principle of universal love in the scientific discourse.

(1) Vinuesa, R. et al., ‘The role of artificial intelligence in achieving the Sustainable Development Goals’, Nature Communications 11(1), 2020, pp. 1-10.

(2) From the footnote in the Impact Assessment: ‘According to McKinsey, the cumulative additional GDP contribution of new digital technologies could amount to €2.2 trillion in the EU by 2030, a 14.1% increase from 2017, McKinsey, Shaping the Digital Transformation in Europe, 2020). PwC comes to an almost identical forecast increase at global level, amounting to USD 15.7 trillion, PwC, Sizing the prize: What’s the real value of AI for your business and how can you capitalise?, 2017’.

(3) Picture from the Nature website, see https://www.nature.com/articles/s41591-024-02874-2